Rules matter: we must always ensure that the moral rules followed by autonomous vehicles stay within the bounds of human morality.

Beyond its technical challenges, autonomous driving faces an unavoidable non-technical one: ethical choice. The most basic question is whether autonomous vehicles can make moral judgments in place of people, and if so, how those judgments should be made.

Recent research by a German team suggests that human moral behavior can be described by algorithms, and that these algorithms could be applied to self-driving cars so that, in an unavoidable accident, the vehicle makes judgments consistent with human moral standards.

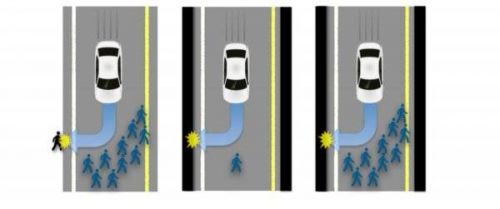

The study was conducted at the Institute of Cognitive Science of Osnabrück University in Germany, which used virtual reality to study human behavior in simulated road traffic scenarios. In the virtual scenario, 105 participants drove through a suburban area on a foggy day and were suddenly confronted with unavoidable collisions. They had to decide which to spare: inanimate objects, animals, or humans. The researchers then analyzed the results statistically.

Until now, it was assumed that moral judgments in unavoidable collisions depend heavily on context and cannot be described by algorithms. Leon Sütfeld, the study's first author, said: "But we found quite the opposite. Human behavior in dilemma situations can be modeled by a rather simple value-of-life-based model that is attributed by the participant to every human, animal, or inanimate object."
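The value-of-life idea can be illustrated as a simple decision rule. This is a minimal sketch, not the study's actual model: it assumes the car must choose between two lanes, and the category names, the numeric values, and the functions `lane_cost` and `choose_lane` are all hypothetical. The researchers derived their model from a statistical analysis of participants' choices rather than from hard-coded numbers like these.

```python
# Hypothetical value-of-life scores, invented purely for illustration.
# In the study, such values were inferred from participants' behavior.
VALUE_OF_LIFE = {
    "inanimate_object": 0.0,
    "dog": 1.0,
    "human": 10.0,
}


def lane_cost(obstacles: list[str]) -> float:
    """Total value of life lost if the car drives into a lane with these obstacles."""
    return sum(VALUE_OF_LIFE[o] for o in obstacles)


def choose_lane(left: list[str], right: list[str]) -> str:
    """Drive into the lane whose obstacles carry the lower total value of life.

    On a tie, the left lane is chosen; this tie-break is arbitrary.
    """
    return "left" if lane_cost(left) <= lane_cost(right) else "right"


if __name__ == "__main__":
    # A human in the left lane, a dog in the right lane: the car sacrifices the dog.
    print(choose_lane(["human"], ["dog"]))
```

Running the script prints `right`, because hitting the dog (cost 1.0) loses less assumed value than hitting the human (cost 10.0). A deterministic rule like this is the simplest reading of a value-of-life model; it sidesteps the harder questions raised below, such as how to rank one person against another.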

For the debate over the ethics of autonomous vehicles, this result is encouraging. If artificial intelligence can learn the moral choices humans make in difficult situations, autonomous vehicles could make human-like moral choices when facing similar dilemmas.

However, the study's limitations are obvious. Ranking the value of life among inanimate objects, animals, and pedestrians is relatively easy. Going further, how should one rank different pedestrians against each other, or the driver against pedestrians?

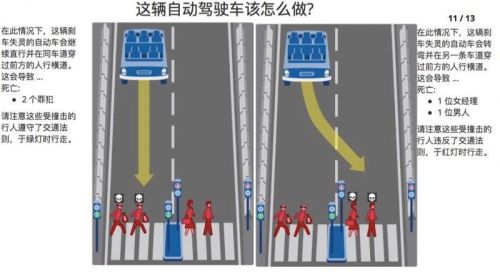

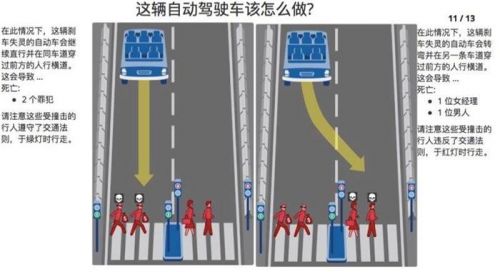

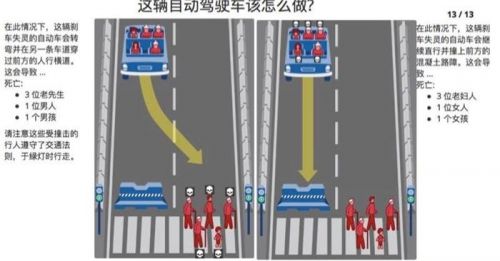

The MIT Media Lab (Massachusetts Institute of Technology Media Lab) has set up a "Moral Machine" platform (http://moralmachine.mit.edu/) to gather human perspectives on the moral decisions that future machine intelligence, such as self-driving cars, will have to make. It presents 13 different scenarios to collect opinions on the ethical dilemmas such machines may encounter.

For example, a self-driving car with brake failure can either continue straight through the crosswalk ahead in its own lane, killing two criminals who are crossing lawfully, or swerve into the other lane, killing two pedestrians who are crossing in violation of traffic rules. Which should it choose?

In another example, a self-driving car with brake failure can either swerve into the other lane, killing a pedestrian who is obeying traffic rules, or continue straight into a barrier, killing its occupant. Which should it choose?

Most people agree that self-driving cars should minimize the number of deaths and save as many lives as possible.

But this is clearly the attitude of a bystander. Would someone who holds this view still be willing to buy a self-driving car that does not prioritize protecting its owner?

This inevitably leads to the famous trolley problem. How should an autonomous car choose between one pedestrian who obeys traffic rules and two pedestrians who break them?

Questions to consider include: To what extent should we hand such moral decisions over to self-driving cars? Do we expect autonomous cars to imitate human moral judgment, or to follow a fixed set of moral rules? Who should decide all this: vehicle manufacturers, car owners, the public, or the machine's random choice? And who is responsible for the possible consequences?

Although many proposals exist for the legal and ethical issues of driverless cars, none has yet solved the problem. The research at Osnabrück University is a small step forward. Until the problem of moral choice is solved, the adoption of driverless cars will not go smoothly.

Still, the first question that cannot be ignored is whether a moral-judgment algorithm should be written into a self-driving car's software at all, which is itself a moral dilemma. The rules therefore matter a great deal, and we must always ensure that the moral rules autonomous vehicles follow stay within the bounds of human morality.

And if the moral choices that humans themselves cannot make are handed over entirely to machines, that amounts to granting artificial intelligence godlike power, with consequences as catastrophic as opening Pandora's box.

Or perhaps, in the future, artificial intelligence capable of independent learning will find a perfect solution to the moral dilemmas humans cannot solve. Who knows?
